by Huenei IT Services | Nov 8, 2023 | Process & Management

Decision Making Model for IT Services

A business is shaped and guided by the decisions we make and by the ones we don't make. We face countless decisions every day, although only a few of them are key for our company and end up significantly impacting our business. To pursue an organization's success, we need criteria and a decision-making model that allow us to be objective and decide based on the company's interests.

But what is decision making? It is the process of choosing a specific alternative from a broader set, based on a strategic analysis of information and an evaluation of the possible resolutions. We compare different alternatives and courses of action and select the one we expect to deliver the best results for the company, in pursuit of organizational efficiency and effectiveness.

How are the decisions characterized?

First, we have to understand that sometimes we know the risk associated with a decision, while in other circumstances it is very difficult to estimate. Accordingly, we can distinguish three kinds of decisions: risk, uncertainty, and ignorance decisions.

| Risk Decisions | Uncertainty Decisions | Ignorance Decisions |
| --- | --- | --- |
| It is possible to calculate the risk associated with the decision. The event is known, as well as the chances of success. | There is a risk, but it is difficult to identify it. The event is known, but the probability of success is unknown. | In this case chance is involved. These are unknown events and therefore unknown probabilities of success. |
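For risk decisions, where both the events and their probabilities are known, a common way to compare options is expected value: the sum of each outcome's payoff weighted by its probability. The sketch below uses hypothetical options, probabilities, and payoffs for illustration only; the article itself does not prescribe this calculation.

```python
from dataclasses import dataclass


@dataclass
class Outcome:
    probability: float  # known probability of this outcome (risk decision)
    payoff: float       # value to the company if the outcome occurs


def expected_value(outcomes: list[Outcome]) -> float:
    """Probability-weighted average payoff; only meaningful when probabilities are known."""
    total_probability = sum(o.probability for o in outcomes)
    if abs(total_probability - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    return sum(o.probability * o.payoff for o in outcomes)


# Hypothetical options, for illustration only.
options = {
    "launch_now": [Outcome(0.6, 100_000), Outcome(0.4, -50_000)],
    "delay_launch": [Outcome(0.9, 40_000), Outcome(0.1, -10_000)],
}

for name, outcomes in options.items():
    print(name, expected_value(outcomes))

# Pick the option with the highest expected value.
best = max(options, key=lambda name: expected_value(options[name]))
print("Best option:", best)
```

Uncertainty and ignorance decisions lack reliable probabilities, so this calculation cannot be applied to them directly.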

Depending on their frequency, decisions are classified as scheduled or unscheduled. Scheduled decisions are made frequently and are therefore repetitive. To address them, it is advisable to develop rules, policies, or procedures that speed up analysis and action. Unscheduled decisions, on the other hand, are one-off and non-recurring, so they require a customized resolution. They should be addressed through a more extensive and standardized decision-making process.
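For scheduled (repetitive) decisions, a rule or policy can be written down once and applied automatically, with anything it does not cover escalated for a customized analysis. The sketch below encodes a hypothetical purchase-approval policy; the request fields, categories, and threshold are assumptions for illustration, not part of the original model.

```python
from dataclasses import dataclass


@dataclass
class PurchaseRequest:
    amount: float   # requested spend
    in_budget: bool  # whether the expense was already budgeted
    category: str   # for example "software_license" or "hardware"


# Hypothetical policy for a recurring decision: routine, budgeted,
# low-value requests are approved automatically; everything else is
# escalated for a customized (unscheduled) analysis.
AUTO_APPROVE_LIMIT = 1_000.0
ROUTINE_CATEGORIES = {"software_license", "office_supplies"}


def decide(request: PurchaseRequest) -> str:
    if (
        request.in_budget
        and request.category in ROUTINE_CATEGORIES
        and request.amount <= AUTO_APPROVE_LIMIT
    ):
        return "approve"
    return "escalate"


print(decide(PurchaseRequest(250.0, True, "software_license")))
print(decide(PurchaseRequest(25_000.0, False, "hardware")))
```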

Clearly, we should try to minimize ignorance decisions. For decisions that are uncertain and unscheduled, we can standardize the process as described below.

How to handle uncertain and unscheduled decisions

The decision-making process always begins with a problem, understood as a situation in which the desired performance differs from the actual performance. A complete and concrete definition of the situation is needed to understand the problem comprehensively. Poorly posed problems lead to wasted resources, lost opportunities, and the application of wrong solutions.

Let’s look at a practical example…

Suppose a company's IT area is struggling with development times: the average development time of its projects exceeds the time agreed with the stakeholder areas. To define the problem more specifically, 80% of the projects in the last 6 months took 30% longer than planned.
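Defining the problem this precisely means measuring the gap between planned and actual performance. The sketch below computes, from a list of projects, the share that overran their agreed time by at least a given threshold. The project names and durations are hypothetical stand-ins, not the company's actual data.

```python
from dataclasses import dataclass


@dataclass
class Project:
    name: str
    planned_days: float  # development time agreed with the stakeholder areas
    actual_days: float   # time the project actually took


def overrun(project: Project) -> float:
    """Relative overrun: 0.3 means the project took 30% longer than planned."""
    return (project.actual_days - project.planned_days) / project.planned_days


def share_over_threshold(projects: list[Project], threshold: float) -> float:
    """Fraction of projects whose overrun is at least `threshold`."""
    if not projects:
        return 0.0
    late = [p for p in projects if overrun(p) >= threshold]
    return len(late) / len(projects)


# Hypothetical projects from the last six months.
projects = [
    Project("crm-upgrade", 50, 68),
    Project("billing-api", 40, 53),
    Project("mobile-app", 90, 120),
    Project("reporting", 30, 40),
    Project("intranet", 20, 21),
]

print(f"{share_over_threshold(projects, 0.30):.0%} of projects overran by 30% or more")
```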

Strategically, this problem causes bottlenecks in important areas of the company's operations. The company's strategy area therefore decides to outsource the development of its most important projects. The challenge now is to determine which software development service provider is best suited for the job.

- Definition of the criteria to evaluate the alternatives. Criteria are the aspects relevant to a particular decision. When we identify a problem, we must identify the criteria relevant to solving it. Continuing with the previous example, the criteria defined are: Cost, Experience, Communication, and Customer reviews.

- Weighting of the criteria. Weighting means defining which criteria are more important than others, since not all of them are equally relevant to the company. We therefore assign each criterion a weight based on its relevance and strategic implications.

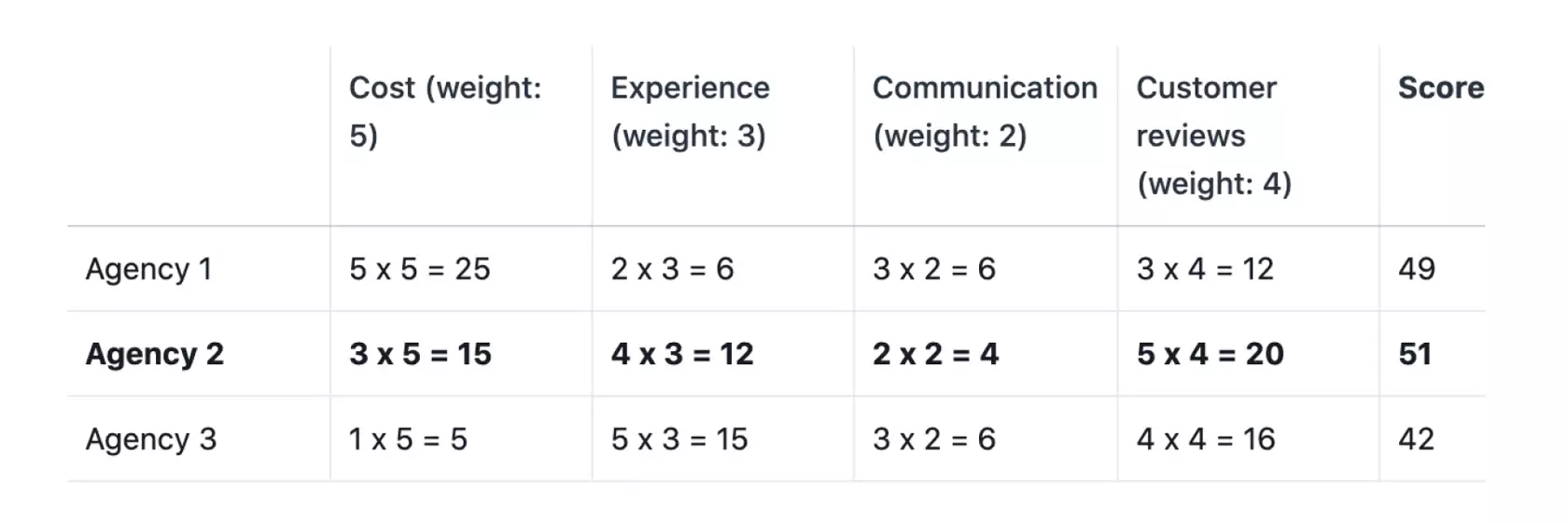

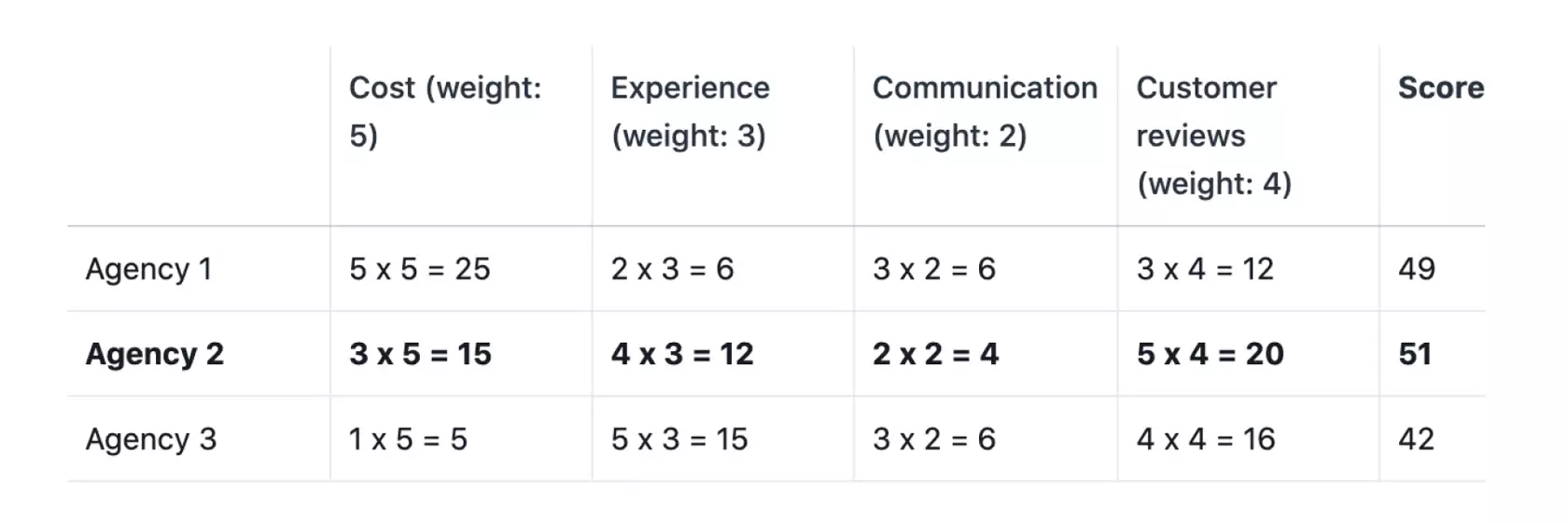

- Decision matrix. Finally, for each alternative, we score each criterion, multiply the scores by the corresponding weights, and add up the results to obtain the alternative's overall score. The images above show an example of this process.
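The three steps above can be sketched as a weighted decision matrix. The criteria come from the example; the weights, the 1-to-5 scores, and the provider names are hypothetical placeholders, not the values shown in the article's images.

```python
# Criteria from the example; weights are hypothetical relative importances.
weights = {"Cost": 4, "Experience": 3, "Communication": 2, "Customer reviews": 1}

# Hypothetical 1-5 scores for each alternative on each criterion.
scores = {
    "Provider A": {"Cost": 3, "Experience": 5, "Communication": 4, "Customer reviews": 4},
    "Provider B": {"Cost": 5, "Experience": 2, "Communication": 3, "Customer reviews": 3},
    "Provider C": {"Cost": 4, "Experience": 3, "Communication": 4, "Customer reviews": 5},
}


def normalize(raw: dict[str, float]) -> dict[str, float]:
    """Scale weights so they sum to 1, keeping their relative importance."""
    total = sum(raw.values())
    return {criterion: w / total for criterion, w in raw.items()}


def weighted_totals(scores, weights):
    """Multiply each criterion score by its weight and sum per alternative."""
    w = normalize(weights)
    return {
        alternative: sum(crit_scores[c] * w[c] for c in w)
        for alternative, crit_scores in scores.items()
    }


totals = weighted_totals(scores, weights)
for alternative, total in sorted(totals.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{alternative}: {total:.2f}")

best = max(totals, key=totals.get)
print("Selected:", best)
```

Normalizing the weights is a design choice: because they sum to 1, each total is a weighted average of the scores and stays on the same 1-to-5 scale, which makes alternatives easy to compare.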

Continuing with the previous case, the selected supplier would be Huenei's Turnkey Projects, since it achieves the best overall score across the decision criteria.

by Huenei IT Services | May 9, 2023 | Artificial Intelligence

Predictive analytics powered by artificial intelligence have immense potential to revolutionize healthcare and other industries.

By analyzing vast amounts of patient data, AI algorithms can identify individuals at risk for certain diseases and predict which treatments will be most effective for each patient. In this article, we explore how AI-enabled predictive analytics tools can help healthcare organizations achieve key objectives.

Detecting Diseases Earlier

One major healthcare goal is detecting diseases at the earliest stages, when they are most treatable. AI predictive analytics support this mission by pinpointing patients likely to develop illnesses based on risk factors in their data. Doctors can then take preventive action, such as lifestyle changes or early interventions, before diseases progress, improving outcomes.
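As a rough illustration of how a model can flag at-risk patients, the sketch below applies a logistic function to a handful of risk factors and flags patients above a cut-off. The factors, coefficients, and 0.5 threshold are entirely hypothetical and not clinically derived; a real model would be trained and validated on patient data.

```python
import math
from dataclasses import dataclass


@dataclass
class Patient:
    age: int
    bmi: float
    smoker: bool
    systolic_bp: int


# Hypothetical coefficients for illustration only; NOT a clinical model.
INTERCEPT = -9.0
COEFFICIENTS = {"age": 0.05, "bmi": 0.08, "smoker": 0.9, "systolic_bp": 0.02}


def risk_probability(p: Patient) -> float:
    """Logistic model: maps a weighted sum of risk factors to a 0-1 probability."""
    z = (
        INTERCEPT
        + COEFFICIENTS["age"] * p.age
        + COEFFICIENTS["bmi"] * p.bmi
        + COEFFICIENTS["smoker"] * (1 if p.smoker else 0)
        + COEFFICIENTS["systolic_bp"] * p.systolic_bp
    )
    return 1.0 / (1.0 + math.exp(-z))


def flag_for_follow_up(patients: list[Patient], threshold: float = 0.5) -> list[int]:
    """Return indices of patients whose predicted risk meets the threshold."""
    return [i for i, p in enumerate(patients) if risk_probability(p) >= threshold]


patients = [Patient(35, 22.0, False, 118), Patient(68, 31.5, True, 150)]
print(flag_for_follow_up(patients))
```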

Improving Patient Outcomes

Healthcare aims to enhance patient outcomes. AI predictive analytics support this by forecasting how patients will likely respond to different treatments. Doctors can then customize treatment plans to each patient’s predicted needs, boosting the chances of successful therapies.

Reducing Costs

Lowering healthcare expenses is a constant pursuit. AI predictive analytics can curb costs by reducing the use of ineffective therapies: algorithms analyze patient data to identify the treatments most likely to work, avoiding expensive trial-and-error approaches.

Enabling Personalized Medicine

Precision medicine, in which treatments are tailored to the individual, is on the rise. AI predictive analytics play a key role by assessing genetics, lifestyles, and health histories to create personalized plans, which leads to more targeted, effective care.

Boosting Population Health

AI predictive analytics also identify health trends across populations by processing large datasets. Providers can then develop focused interventions to boost community-wide outcomes.

At Huenei, we specialize in ethical, privacy-focused AI development, including predictive analytics. Our solutions enable organizations to leverage AI while protecting patient data through strong security policies. Contact us today to explore how our AI expertise can help your healthcare organization pursue vital goals.

by Huenei IT Services | May 6, 2023 | Artificial Intelligence

How can you successfully integrate Chat GPT and other OpenAI models into software development?

Technology advances by leaps and bounds, opening up new solutions and possibilities to explore in software development that can take us to unimagined places. Staying at the forefront of these possibilities requires constant training and learning, which allows us to build new expertise: for example, integrating OpenAI models into projects built on cutting-edge technology, such as a Chat GPT integration.

In this blog post, we share how, together with one of our large clients, we implemented a concrete business case: a Chat GPT integration into a custom software solution.

The objective of the application is to provide a dynamic and flexible training platform for the sales force of a renowned pharmaceutical laboratory, able to obtain information online without first uploading data, which saves cost and time.

Beyond standard user, group, and profile administration features, the software solution includes training management modules: roles, suggested exams per role, exam forms, and results tracking per exam, per role, and per group.

The key innovation we achieved is the integration with Chat GPT, combining two of its main functionalities: Information Search and Text Analysis.

After a series of proof-of-concept tests, carried out by our team of Prompt Engineers together with business specialists on the client's side, we refined the parameters so that information is obtained accurately and reliably while keeping the number of bytes sent and received reasonable to optimize costs. We concluded the following:

- We use “Information Search” to obtain online information about drug types, such as the information typically found in a drug package insert.

- We use “Text Analysis” to compare the information obtained with the answer entered by the user, and we score the answer according to the resulting accuracy percentage.

The sum of each user's scores gives a final result, which is recorded and becomes part of their training record through integration with the client's LMS (Learning Management System).
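The scoring flow described above (compare the reference text with the user's answer, turn the accuracy percentage into a score, and add up the scores) can be sketched as follows. In the actual solution the comparison is done by Chat GPT; here Python's standard `difflib.SequenceMatcher` is swapped in as a simple offline stand-in, and the score bands are hypothetical.

```python
from difflib import SequenceMatcher


def similarity_percent(reference: str, answer: str) -> float:
    """Character-level similarity (0-100); a stand-in for the Chat GPT text analysis."""
    matcher = SequenceMatcher(None, reference.lower().strip(), answer.lower().strip())
    return matcher.ratio() * 100


def score_answer(reference: str, answer: str) -> int:
    """Map the accuracy percentage to points using hypothetical bands."""
    accuracy = similarity_percent(reference, answer)
    if accuracy >= 80:
        return 10
    if accuracy >= 50:
        return 5
    return 0


def final_result(pairs: list[tuple[str, str]]) -> int:
    """Sum of per-question scores: the final result that would be recorded in the LMS."""
    return sum(score_answer(reference, answer) for reference, answer in pairs)


pairs = [
    ("Take one tablet every 8 hours with food.", "Take one tablet every 8 hours with food."),
    ("Store at room temperature, away from light.", "Keep it in the fridge."),
]
print(final_result(pairs))
```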

The results have been excellent, with a major positive impact for the client in terms of cost and time, thanks to the high degree of automation in the process of training its sales force.

by Huenei IT Services | May 6, 2023 | Artificial Intelligence

Organizations are constantly seeking ways to boost productivity, streamline processes, and improve customer experience. Generative AI is helping them achieve these goals, and OpenAI models are a clear example of its benefits.

Generative AI can be particularly useful in business software applications in several ways. Let’s go through some OpenAI benefits:

- Data Analysis: Generative AI can be used to analyze large amounts of data and identify patterns and trends. This can help businesses make better decisions and optimize their operations. For example, generative AI can be used to analyze customer data to identify buying patterns and preferences, which can help businesses tailor their marketing strategies to specific customer groups.

- Personalization: Generative AI can be used to personalize the user experience in applications by generating customized content for each user. For example, a news application can generate personalized news articles for each user based on their reading habits and interests.

- Training: Generative AI can be used to create customized training content on many subjects for different departments of your organization (sales force, technical training, etc.).

- Predictive Maintenance: Generative AI can be used to predict equipment failures and maintenance needs by analyzing data from sensors and other sources. This can help businesses avoid costly downtime and reduce maintenance costs by performing maintenance only when needed.

- Regionalization: Generative AI can be used to localize your app into different languages and expand its global reach.

Generative AI can help businesses streamline operations, reduce costs, and make better decisions by leveraging the power of data analysis and machine learning. However, businesses must exercise quality control over the generated content to ensure its accuracy and consistency.

In conclusion, Generative AI is proving to be a game-changer for businesses looking to improve their operations and customer experience. OpenAI benefits organizations by providing advanced data analysis, personalization, training, predictive maintenance, and regionalization capabilities. By leveraging these benefits, businesses can increase productivity, reduce costs, and make better decisions, as long as they ensure the accuracy and consistency of the results through quality control measures.

As a provider of OpenAI model-powered development services, we can help you create custom models that deliver real results and take your business to new heights.

by Huenei IT Services | May 6, 2023 | Process & Management

Digital product development management is a complex process that involves several steps, from idea generation to product launch and post-launch evaluation. To ensure success, it is important to understand the key aspects of digital product development management and best practices for effective management.

In this article, we will explore the steps involved in digital product development management and provide a checklist for successful management. But first, let’s discuss what digital product development management is and why it is important.

Understanding Digital Product Development Management

Digital product development management is the process of creating and managing digital products, such as software, applications, and websites. Effective digital product development management ensures that products are designed and developed with the end user in mind, and are delivered on time and within budget.

This activity has several benefits, including improved product quality, faster time-to-market, and increased customer satisfaction. However, managing product development can also be challenging due to the constantly evolving digital landscape and the need to stay ahead of the competition.

Critical Steps in Digital Product Development Management

Product development management involves a series of key steps that are critical to the success of any digital product. By following these steps and incorporating best practices, businesses can increase their chances of creating successful digital products that meet the needs of their target audience. Let's outline the five key steps in product development management, followed by the best practices that support them.

- Idea generation and concept development: This is the first step in the development of digital products. During this phase, ideas are generated, and concepts are developed. It is important to involve all stakeholders, including developers, designers, marketers, and business analysts.

- Market research and competitive analysis: In this step, market research is conducted to understand the needs and preferences of the target audience. Competitive analysis is also conducted to identify potential competitors and understand their strengths and weaknesses.

- Product design and prototyping: During this phase, the product design is created, and prototypes are developed. User feedback is collected to refine the design and ensure that it meets the needs of the target audience.

- Development and testing: This is the phase where the product is developed and tested. It is important to ensure that the product is developed according to the design and that it meets the needs of the target audience.

- Launch and post-launch: In this step, the product is launched, and a post-launch evaluation is conducted to determine whether the product is meeting the needs of the target audience. User feedback is collected and analyzed to identify areas for improvement.

Best Practices for Digital Product Development Management

To ensure successful product development management, it is important to follow best practices. Some of these best practices include:

- Team collaboration and communication: Effective collaboration and communication among team members are essential for successful product development. Regular team meetings and clear communication channels should be established.

- Effective project management: Sound project management is critical to the successful development of digital products. Project milestones should be established, and progress should be monitored regularly.

- Utilizing Agile methodologies: Agile methodologies are becoming increasingly popular in digital product development management. Agile methodologies involve iterative development, which allows for flexibility and the ability to quickly respond to changes.

- Incorporating user feedback throughout the development process: User feedback should be collected and analyzed throughout the development process to ensure that the product meets the needs of the target audience.

Checklist for Successful Digital Product Development Management

To ensure successful product development management, at Huenei we use a checklist that summarizes all the important aspects of the process. We share it with you below so that you can take advantage of our experience and our work methodology in your projects:

- Establish clear goals and objectives for the product.

- Conduct market research and competitive analysis.

- Involve all stakeholders in idea generation and concept development.

- Create a detailed product design and develop prototypes.

- Develop the product and test it.

- Launch the product and collect user feedback.

- Analyze user feedback and make necessary improvements.

- Regularly monitor progress and adjust the development plan as necessary.

- Utilize Agile methodologies for flexibility.

- Communicate regularly with team members and stakeholders.
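To apply the checklist across projects, one option is to keep it as data and track which items each project has completed. The sketch below is a hypothetical way of doing that; the class and method names are illustrative and do not refer to an existing tool.

```python
from dataclasses import dataclass, field

CHECKLIST = [
    "Establish clear goals and objectives for the product.",
    "Conduct market research and competitive analysis.",
    "Involve all stakeholders in idea generation and concept development.",
    "Create a detailed product design and develop prototypes.",
    "Develop the product and test it.",
    "Launch the product and collect user feedback.",
    "Analyze user feedback and make necessary improvements.",
    "Regularly monitor progress and adjust the development plan as necessary.",
    "Utilize Agile methodologies for flexibility.",
    "Communicate regularly with team members and stakeholders.",
]


@dataclass
class ProjectChecklist:
    project: str
    done: set[int] = field(default_factory=set)  # indices of completed items

    def complete(self, item: int) -> None:
        """Mark a checklist item as done, rejecting unknown indices."""
        if not 0 <= item < len(CHECKLIST):
            raise IndexError(f"no checklist item {item}")
        self.done.add(item)

    def progress(self) -> float:
        """Share of checklist items completed (0.0 to 1.0)."""
        return len(self.done) / len(CHECKLIST)

    def pending(self) -> list[str]:
        """Items not yet completed, in checklist order."""
        return [text for i, text in enumerate(CHECKLIST) if i not in self.done]


tracker = ProjectChecklist("customer-portal")
for item in (0, 1, 2):
    tracker.complete(item)
print(f"{tracker.project}: {tracker.progress():.0%} complete")
print("Next:", tracker.pending()[0])
```

Some items, such as regular monitoring and communication, are ongoing rather than one-time tasks, so marking them as done is a simplification.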

All in all, digital product development management is a critical process that requires careful planning and execution. By following best practices and using our proposed checklist, businesses can significantly improve the chances that their products are developed and launched successfully. By involving all stakeholders in the process, conducting market research, and incorporating user feedback throughout the development process, businesses can create products that meet the needs and preferences of their target audience.

Effective communication and collaboration among team members, as well as the use of Agile methodologies, can also contribute to the successful development of digital products. By regularly monitoring progress and making necessary adjustments, businesses can keep their digital products on track to be delivered on time and within budget.