We previously learned about the benefits of Hardware Acceleration with FPGAs, as well as a few Key concepts about acceleration with FPGAs for companies and teams. In this article, we will learn about the applications that typically benefit from employing this technology.

First, we will compare distributed and heterogeneous computing, highlighting the role of FPGAs in data centers. Then, we will present the most widespread uses of Acceleration with FPGAs, including Machine Learning, Image and Video Processing, and Databases.

Distributed vs. Heterogeneous Computing

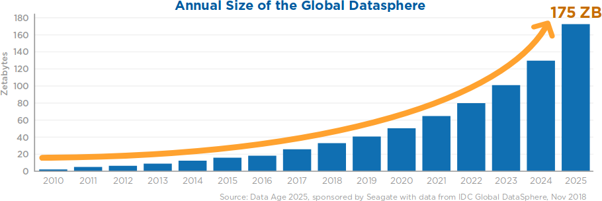

Over the past 10 years, we have witnessed exponential growth in the generation of data. This is due in part to the rise and popularity of electronic devices such as cell phones, Internet of Things (IoT) devices, and wearables (smartwatches), among many others.

At the same time, users have been consuming content of ever higher quality. Television and streaming services are a clear example: they have gradually raised the quality of their content, which in turn requires more data.

This growth in the creation and consumption of data has given rise to new computationally demanding applications, capable of both leveraging data and aiding in its processing.

However, long processing times directly affect the user experience and can make a solution impractical. This raises the question: how can we reduce execution times to make the proposed solutions more viable?

One solution is Distributed Computing, where several computers are interconnected over a network to share the workload. Under this model, the maximum theoretical acceleration is equal to the number of machines taking part in the data processing. Although it is a viable solution, its drawback is that the time spent distributing and transmitting data over the network must also be taken into account.

For example, to cut data processing time to one third, we would need at least three computers, and in practice four or more once network overhead is taken into account, which would drive up energy costs and the physical space required.
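The relationship between machine count, network overhead, and achievable acceleration can be sketched with a simple model. This is a minimal illustration, assuming perfectly divisible work and a fixed overhead for distributing and transmitting data; the function names and all numbers are hypothetical and chosen only to make the arithmetic easy to follow.

```python
import math


def distributed_speedup(n_machines, compute_time, overhead_time=0.0):
    """Speedup of n machines over a single machine.

    Assumes the work splits evenly across machines and that distributing
    the data costs a fixed overhead_time (a simplification; real network
    overhead usually depends on data size and machine count).
    """
    if n_machines < 1:
        raise ValueError("n_machines must be at least 1")
    return compute_time / (compute_time / n_machines + overhead_time)


def machines_needed(target_speedup, compute_time, overhead_time=0.0):
    """Smallest machine count that reaches target_speedup under the same model."""
    # Time left for actual computation once the overhead is paid.
    budget = compute_time / target_speedup - overhead_time
    if budget <= 0:
        raise ValueError("overhead alone already exceeds the target time")
    return math.ceil(compute_time / budget)


# Hypothetical job: 90 s on one machine, target is one third of that (30 s).
ideal = machines_needed(3, 90.0)              # no network overhead
realistic = machines_needed(3, 90.0, 7.5)     # 7.5 s spent moving data
print(ideal, realistic)
```

With no overhead the model needs three machines; with 7.5 seconds of assumed overhead it needs four, which shows how quickly network costs erode the theoretical maximum.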

Another alternative is Heterogeneous Computing. In addition to using general-purpose processors (CPUs), this approach improves the performance of a single computer by adding specialized processing capabilities for particular tasks.

This is where general-purpose graphics cards (GPGPUs) or programmable logic cards (FPGAs) come in. One of the main differences is that the former have a fixed architecture, while the latter can be reconfigured to fit the workload and consume less energy (among other reasons, because only the hardware that is actually needed is implemented).

Unlike Distributed Computing, in Heterogeneous Computing the acceleration depends on the type of application and the architecture developed. For example, databases may see a lower acceleration factor than Machine Learning inference (which can be accelerated hundreds of times); another example is financial algorithms, where the acceleration factor can reach the thousands.

Additionally, instead of adding computers, boards are simply added to PCIe slots, which saves resources, physical space, and energy. This results in a lower Total Cost of Ownership (TCO).

FPGA-based accelerator cards have become an excellent accessory for data centers, available both on-premises (own servers) and in cloud services like Amazon, Azure, and Nimbix, among others.

Applications that benefit from hardware acceleration with FPGAs

In principle, any application involving complex algorithms and large volumes of data, where processing time is long enough to offset the cost of moving data to and from the card, is a candidate for acceleration. In addition, the workload must lend itself to parallelization. Some of the typical FPGA solutions that meet these criteria are:

One of the most disruptive techniques in recent years has been Machine Learning (ML). Hardware acceleration can bring many benefits here because of the high level of parallelism and the huge number of matrix operations required. These benefits appear both in the training phase of the model (reducing training time from days to hours or minutes) and in the inference phase, enabling real-time applications such as fraud detection, real-time video recognition, and voice recognition.

Image and Video Processing is one of the areas that benefits most from acceleration, which makes it possible to work in real time on tasks such as video transcoding, live streaming, and image processing. It is used in applications such as medical diagnostics, facial recognition, autonomous vehicles, smart stores, and augmented reality.

Databases and Analytics receive increasingly complex workloads due to advances in ML, forcing data centers to evolve. Hardware acceleration provides solutions for both computing (for example, accelerators that, without touching code, speed up PostgreSQL by 5-50X or Apache Spark by up to 30X) and storage (via smart SSDs with FPGAs).

The large volumes of data to be processed require faster and more efficient storage systems. By moving information processing (compression, encryption, indexing) as close as possible to where the data resides, bottlenecks are reduced, freeing up the processor and reducing system power requirements.

Something similar happens with Network Acceleration, where information processing (compression, encryption, filtering, packet inspection, switching, and virtual routing) moves to where the data enters or leaves the system.

High-Performance Computing (HPC) is the practice of adding more computing power, in such a way as to deliver much higher performance than a conventional PC, to solve major problems in science and engineering. It includes everything from Human Genome sequencing to climate modeling.

In the case of Financial Technology, time is key to reducing risks, making informed business decisions, and providing differentiated financial services. Processes such as modeling, trading, evaluation, and risk management, among others, can be accelerated.

With hardware acceleration, Tools and Services can be offered to process information in real-time, helping automate designs and reducing development times.
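The two criteria stated at the start of this section (processing time long enough to offset the trip to the card, and a workload that parallelizes) can be made concrete with an Amdahl's-law style estimate. The following is a rough sketch, not a vendor formula: the function name, the idea of a single fixed transfer time, and all the numbers are assumptions for illustration.

```python
def offload_speedup(cpu_time, parallel_fraction, kernel_speedup, transfer_time):
    """Estimated end-to-end speedup when the parallel part runs on a card.

    cpu_time:          total run time on the CPU alone (seconds)
    parallel_fraction: share of cpu_time that can be parallelized (0..1)
    kernel_speedup:    how much faster the card runs that share (assumed)
    transfer_time:     time to move data to and from the card (assumed fixed)
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if kernel_speedup <= 0:
        raise ValueError("kernel_speedup must be positive")
    serial_part = cpu_time * (1.0 - parallel_fraction)            # stays on the CPU
    accelerated_part = cpu_time * parallel_fraction / kernel_speedup
    return cpu_time / (serial_part + accelerated_part + transfer_time)


# Hypothetical long job: 120 s, 75% parallelizable, 10x faster on the card,
# 1 s of data transfer. The transfer cost is small next to the work saved.
long_job = offload_speedup(120.0, 0.75, 10.0, 1.0)

# Hypothetical short job: same profile, but only 0.5 s of total work.
# The 1 s transfer dominates, so offloading makes things slower.
short_job = offload_speedup(0.5, 0.75, 10.0, 1.0)

print(f"long job:  {long_job:.2f}x")
print(f"short job: {short_job:.2f}x")
```

A result above 1 suggests the application is a candidate for acceleration; a result below 1 means the transfer cost outweighs the gain. Note that the serial share caps the benefit no matter how fast the card is, which is why the workload has to parallelize well.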

Summary

To compare the two models briefly: in Distributed Computing, several computers are interconnected over a network and the workload is distributed among them. This model, used for example by Apache Spark, is highly scalable, but its energy consumption and physical footprint are high and grow in proportion to the number of machines.

As for Heterogeneous Computing, the performance of a single computer is improved by adding hardware with specialized processing capabilities (for example, accelerator cards such as GPGPUs or FPGAs). The acceleration obtained depends on the type of application and can range, in some cases, from 1-10X (for example, with Databases) to hundreds or thousands of times with Machine Learning.

Through a profiling and validation analysis of the feasibility of parallelizing the different processes and solutions, we can determine if FPGA Hardware Acceleration is the ideal solution for your company, especially when working with complex algorithms and large data volumes.

In this way, your business can improve user satisfaction rates by offering a faster and smoother experience with reduced processing times; additionally, and thanks to the reduction of TCO, your budget control can be optimized.